New G-Mapper version available

We’re pleased to announce the release of G-Mapper version 3.0.5.0. This version includes the changes previously released in the online version as well as a few enhancements and bug fixes.

We have finally decided to purchase a code signing certificate to remove some of those nasty Windows warnings about untrusted applications! If you can help contribute towards our costs, we would really appreciate your support.

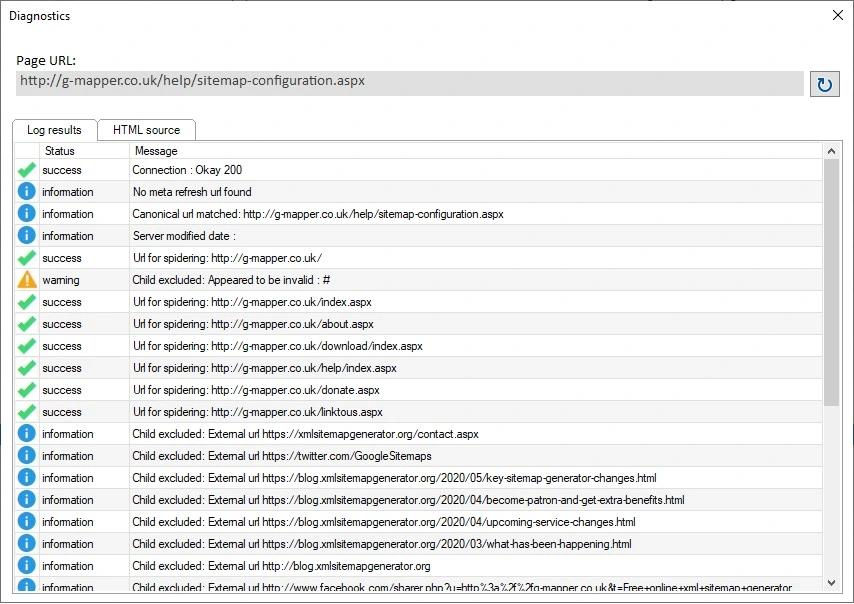

Diagnostic tool

We added a diagnostic tool to our online sitemap generator some time ago. Due to popular demand and to help with support issues, this tool is now available directly within G-Mapper.

You can use the tool to help diagnose problems with creating your sitemap, which may also affect your website’s SEO.

You can access the diagnostic tool from the main toolbar, which scans your sitemap start/home page, or from the new context menu to scan a particular page.

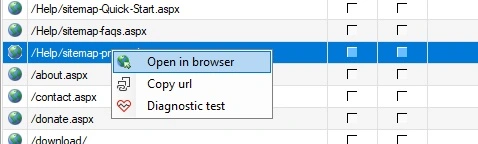

Context menu

To make it easier to manage your sitemap and find pages within your website, we’ve added a context menu. When you right-click a page you can navigate to it, copy its url, or run the diagnostic tool.

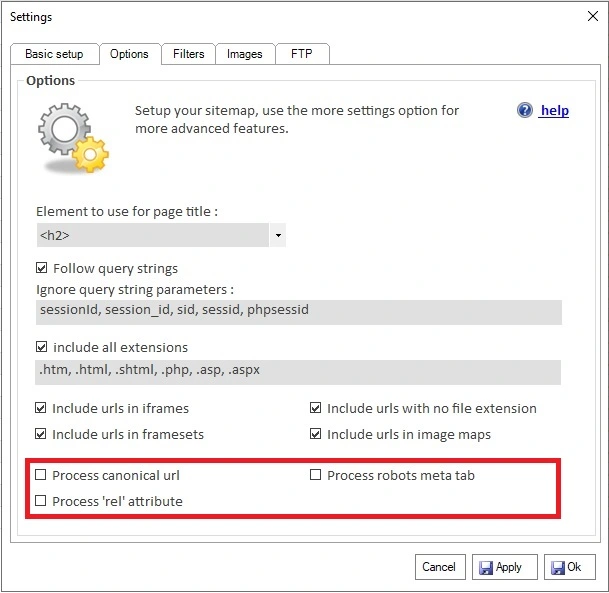

Canonical urls

As with the online sitemap generator, we will no longer automatically redirect to alternate domains when we find a canonical url. Misuse and poor implementations of canonical urls on some sites were causing problems.

Instead, by default we will process the page within the context of the current domain and issue warnings about canonical urls in the logs.

If you set the option to obey canonical urls we will assume you are spidering the correct domain and when we see a canonical url:

- If it matches the current page we will include the current page.

- If it is within the current domain we will ignore the current page and content and add the canonical url to the list to be spidered.

- If it is outside of the current domain we will ignore the page and content.
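The three rules above can be sketched in a few lines of Python. This is only an illustration, not G-Mapper’s actual code: the function name `canonical_action`, the action labels and the idea that "current domain" means "same host name" are all assumptions made for the example.

```python
from urllib.parse import urljoin, urlsplit


def _normalise(url):
    """Reduce a url to the parts compared here (assumption: fragments are ignored)."""
    parts = urlsplit(url)
    return (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query)


def canonical_action(page_url, canonical_href):
    """Hypothetical helper: decide what to do with a page that declares a canonical url.

    Returns a tuple (action, url) where action is "include", "queue" or "ignore".
    """
    # Canonical links may be relative, so resolve them against the current page.
    canonical_url = urljoin(page_url, canonical_href)

    # Rule 1: the canonical url matches the current page, so include the page.
    if _normalise(canonical_url) == _normalise(page_url):
        return ("include", page_url)

    # Rule 2: same domain (assumed: same host), so skip this page and
    # add the canonical url to the list to be spidered.
    if urlsplit(canonical_url).netloc.lower() == urlsplit(page_url).netloc.lower():
        return ("queue", canonical_url)

    # Rule 3: the canonical url is outside the current domain, so ignore the page.
    return ("ignore", None)
```

For example, a page at `https://example.com/a?x=1` that declares `https://example.com/a` as its canonical url would be skipped and the canonical url queued instead.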

Robots meta and rel nofollow / noindex

Again, as with the online sitemap generator, some sites ended up with no pages or missing pages in their sitemap because of the robots meta tag and the rel nofollow attribute, which led to negative feedback.

By default we will now ignore these and issue warnings in the logs. If you wish for our spider to obey any robots meta or rel attributes, please use the advanced settings.
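As a rough illustration of this default, the sketch below reads the robots meta tag from a page and reports what a spider might do with it. It is not taken from G-Mapper: `robots_decision`, the `obey_robots` flag and the warning text are hypothetical names chosen for the example, and only the robots meta tag is handled (not rel attributes on links).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from any <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def robots_decision(html, obey_robots=False):
    """Hypothetical helper: warn about robots directives, obey them only if asked."""
    parser = RobotsMetaParser()
    parser.feed(html)

    # "none" is shorthand for "noindex, nofollow".
    noindex = bool(parser.directives & {"noindex", "none"})
    nofollow = bool(parser.directives & {"nofollow", "none"})

    warnings = []
    if noindex:
        warnings.append("robots meta: noindex")
    if nofollow:
        warnings.append("robots meta: nofollow")

    return {
        # By default the directives are only logged, not obeyed.
        "include_page": not (obey_robots and noindex),
        "follow_links": not (obey_robots and nofollow),
        "warnings": warnings,
    }
```

With the default `obey_robots=False`, a page marked `noindex, nofollow` is still included and its links followed, but both directives show up in the warnings.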

Other changes

We’ve changed our user agent header to imitate common browsers, as some websites were returning an HTTP 403 (Forbidden) error.

We’ve increased the maximum page size to 300 KB (uncompressed), as urls were being missed on some sites because of the size of their pages.

We’ve also made some general improvements and bug fixes to our spider code to make it more reliable and robust.

We’ve added more validation around the FTP upload to make it easier to diagnose issues.

You can now reach our web diagnostic tool from the toolbar and settings page.

Bug fixes

We’ve also been resolving a number of bugs thanks to the reports you send us. Please do include your email address when you send a report as it can be really helpful in some cases to be able to email you for more information.

- Gzip and deflate compression not working causing excessive bandwidth use.

- Mixed date formats fixed; dates now use the YYYY-MM-DD format.

- Disable batch edit when not on correct tab to prevent errors.

- Ping utility error fixed.

- Error when trying to export to folder without write permission.

- Error exporting map with no urls.

- Crash when checking for a new version if the server cannot be reached.

Links

- Download the latest XML Sitemap Generator for Windows

- Support us by making a small contribution.